Introduction

“I need to know what this will do to my production systems before I run it.” – Ask a Systems Administrator why they want dry-run mode in a management tool, and this is the answer you’ll get almost every single time.

Historically, we have been able to use dry-run as a risk mitigation strategy before applying changes to machines. Dry-run is supposed to report what a tool would do, so that the administrator can determine if it is safe to run. Unfortunately, this only works if the reporting can be trusted as accurate.

In this post, I’ll show why modern configuration management tools behave differently than the classical tool set, and why their dry-run reporting is untrustworthy. While useful for development, it should never be used in place of proper testing.

make -n

Many tools in a sysadmin’s belt have a dry-run mode. Common utilities like make, rsync, rpm, and apt all have it. Many databases will let you simulate updates, and most disk utilities can show you changes before making them.

The make utility is the earliest example I can find of an automation tool with a dry-run option. Dry-run in make -n works by building a list of commands, then printing instead of executing them. This is useful because it can be trusted that make will always run the exact same list in real-run mode. Rsync and others behave the same way.
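To make the list-then-print idea concrete, here is a minimal sketch in Python. It is not how make is implemented; the function names and the two compiler commands are assumptions chosen for illustration. The point is only that both modes walk the identical, precomputed list.

```python
import subprocess


def build_command_list(target):
    """Decide every command up front; nothing here inspects the live system.

    The two compiler invocations are hypothetical build steps.
    """
    return [
        ["cc", "-c", f"{target}.c", "-o", f"{target}.o"],
        ["cc", f"{target}.o", "-o", target],
    ]


def run(commands, dry_run=False, execute=None):
    """Walk the same list in both modes; dry-run prints instead of executing."""
    if execute is None:
        execute = lambda cmd: subprocess.run(cmd, check=True)
    for cmd in commands:
        if dry_run:
            print(" ".join(cmd))
        else:
            execute(cmd)
    return commands
```

Because the list is fixed before anything runs, `run(cmds, dry_run=True)` prints exactly what `run(cmds)` would later execute.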

Convergence-based tools, however, don’t build lists of commands. They build sets of convergent operators instead.

Convergent Operators, Sets and Sequence

Convergent operators ensure state. They have a subject, and two sets of instructions. The first set are tests that determine if the subject is in the desired state, and the second set takes corrective actions if needed. Types are made by grouping common tests and actions. This allows us to talk about things like users, groups, files, and services abstractly.
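As a rough illustration of that structure, the sketch below models one operator in Python. The class name, the dictionary used as a stand-in for machine state, and the `user_present` helper are all assumptions for this example; none of the tools discussed here expose this exact shape.

```python
from dataclasses import dataclass
from typing import Callable, Dict

State = Dict[str, set]  # toy stand-in for a machine's state


@dataclass
class ConvergentOperator:
    subject: str
    in_desired_state: Callable[[State], bool]  # the tests
    repair: Callable[[State], None]            # the corrective actions

    def converge(self, state: State) -> bool:
        """Run the tests; act only if needed. Returns True if a change was made."""
        if self.in_desired_state(state):
            return False
        self.repair(state)
        return True


def user_present(name: str) -> ConvergentOperator:
    """A hypothetical 'user' type: common tests and actions grouped by subject."""
    return ConvergentOperator(
        subject=f"user[{name}]",
        in_desired_state=lambda s: name in s["users"],
        repair=lambda s: s["users"].add(name),
    )
```

Calling `converge` twice on the same state changes it the first time and does nothing the second, which is the property that lets these operators sit in a feedback loop.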

CFEngine promise bundles, Puppet manifests, and Chef recipes are all sets of these data structures. Putting them into a feedback loop lets them cooperate over multiple runs, and enables the self-healing behavior that is essential when dealing with large amounts of complexity.

During each run, ordered sets of convergent operators are applied against the system. How the order is determined varies from tool to tool, but it is ordered nonetheless.

Promises and Lies

CFEngine models Promise Theory as a way of doing systems management. While Puppet and Chef do not model promise theory explicitly, it is still useful to borrow its vocabulary and metaphors and think about individual, autonomous agents that promise to fix the things they’re concerned with.

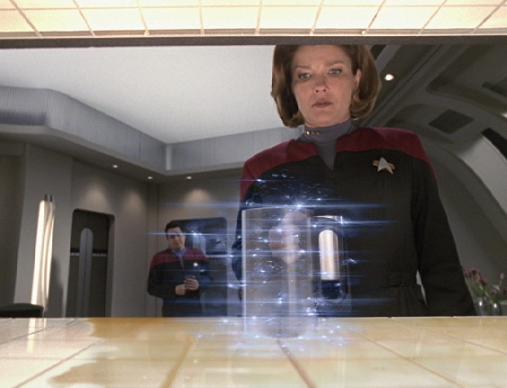

When writing policy, imagine every resource statement as a simple little robot. When the client runs, a swarm of these robots run tests, interrogate package managers, inspect files, and examine process tables. Corrective action is taken only when necessary.

When dealing with these agents, it can sometimes seem like they’re lying to you. This raises a few questions. Why would they lie? Under what circumstances are they likely to lie? What exactly is a lie anyway?

A formal examination of promises does indeed include the notion of lies. Lies can be outright deceptions, which are the lies of the rarely-encountered Evil Robots. Lies can also be “non-deceptions”, which are the lies of occasionally-encountered Broken Robots. Most often though, we experience lies from the often-encountered Merely Mis-informed Robots.

The Best You Can Do

The best you can possibly hope to do in a dry-run mode is to build the operator sequences, then interrogate each operator about what it would do to repair the system at that exact moment. The problem with this is that, in real-run mode, the system is changing between the tests. Quite often, the results of any given test will be affected by a preceding action.

Configuration operations can have rather large side effects. Sending signals to processes can change files on disk. Mounting a disk will change an entire branch of a directory tree. Packages can drop off one or a million different files, and will often execute pre or post-installation scripts. Installing the Postfix package on an Ubuntu system will not only write the package contents to disk, but also create users and disable Exim before automatically starting the service.

Throw in some notifications and boolean checks and things can get really interesting.

Experiments in Robotics

To experiment with dry-run mode, I wrote a Chef cookbook that configures a machine with initial conditions, then drops off CFEngine and Puppet policies for dry-running.

Three configuration management systems, each with conflicting policies, wreaking havoc on a single machine sounds like a fun way to spend the evening. Let’s get weird.

If you already have a Ruby and Vagrant environment set up on your workstation and would like to follow along, feel free. Otherwise, you can just read the code examples by clicking on the provided links as we go.

Clone the dry-run-lies cookbook from GitHub, then bring up a Vagrant box with Chef.

CFEngine --dry-run

When Chef is done configuring the machine, log into it and switch to root. We can test the /tmp/lies-1.cf policy file by running cf-agent with the -n flag.

Dry-run mode reports that it would run an echo command in bundle_one. Let’s remove -n and see what happens.

Wait a sec… What’s all this bundle_three business? Did dry-run just lie to me?

Examine the lies-1.cf file here.

The policy said three things. First, “echo hello from bundle one if /usr/bin/puppet does NOT exist”. Second, “make sure the puppet package is installed”. Third, “echo hello from bundle three if /usr/bin/puppet exists.”

In dry-run mode, each agent was interrogated individually. This resulted in a report leading us to believe that only one “echo hello” would be made, when in reality, there were two.
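The effect can be reproduced with a toy model. The Python sketch below is not CFEngine and does not reproduce cf-agent's output; the state dictionary, the function names, and the assumption that installing the package creates /usr/bin/puppet are all illustrative. It encodes the three statements above, then compares interrogating every operator against a frozen state with applying them in order.

```python
def fresh_state():
    """Toy machine state: Puppet is not installed yet."""
    return {"files": set(), "packages": set(), "log": []}


def install_puppet(state):
    state["packages"].add("puppet")
    state["files"].add("/usr/bin/puppet")  # side effect of the install (assumed)


def build_policy():
    """Ordered (name, needs_action, act) triples mirroring the three statements."""
    return [
        ("bundle_one: echo hello",
         lambda s: "/usr/bin/puppet" not in s["files"],
         lambda s: s["log"].append("hello from bundle one")),
        ("bundle_two: install puppet",
         lambda s: "puppet" not in s["packages"],
         install_puppet),
        ("bundle_three: echo hello",
         lambda s: "/usr/bin/puppet" in s["files"],
         lambda s: s["log"].append("hello from bundle three")),
    ]


def dry_run(policy, state):
    """Interrogate every operator against the unchanged state."""
    return [name for name, needs_action, _ in policy if needs_action(state)]


def real_run(policy, state):
    """Test and act in order, so earlier actions change later tests."""
    performed = []
    for name, needs_action, act in policy:
        if needs_action(state):
            act(state)
            performed.append(name)
    return performed
```

In this model, `dry_run` never mentions bundle_three because its test is evaluated before anything installs Puppet, while `real_run` performs all three operations and logs both greetings.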

Puppet --noop

Let’s give Puppet a spin. We can test the policy at /tmp/lies-1.pp with the --noop flag to see what Puppet thinks it will do.

Dry-run reports that there is one resource to fix. Excellent. Let’s remove the --noop flag and see what happens.

Like the CFEngine example, we have the real-run doing things that were not listed in the dry-run report.

The Chef policy that set up the initial machine state mounted an NFS directory into /mnt/nfssrv. When interrogated during dry-run, the tests in the file resources saw that the files were present, so they did not report that they needed to be fixed. During the real-run, Puppet unmounts the directory, changing the view of the filesystem and the outcome of the tests.

Check out the policy here.

It should be noted that Puppet’s resource graph model does nothing to enable noop functionality, nor can it affect its accuracy. It is used only for the purposes of ordering and ensuring non-conflicting node names within its model.

Chef --why-run

Finally, we’ll run the original Chef policy with the -W (--why-run) flag to see if it lies like the others.

Seems legit. Let’s remove the --why-run flag and do it for real.

Right. “HACKING THE PLANET” was definitely not in the dry-run output. Let’s go figure out what happened. See the Chef policy here.

Previously, our CFEngine policy had installed Puppet on the machine. Our Puppet policy ensured nmap was absent. Chef will install nmap, but only if the Puppet binary is present in /usr/bin.

Running Chef in --why-run mode, the test for the 'package[nmap]' resource succeeds because of the pre-conditions set up by the CFEngine policy. Had we not applied that policy, the 'execute[hack the planet]' resource would still not have fired because nothing installs nmap along the way. In real-run mode, it succeeds because Chef changes the machine state between tests, but it would have failed if we had never run the Puppet policy.

Yikes.

Okay, So What?

The robots were not trying to be deceptive. Each autonomous agent told us what it honestly thought it should do in order to fix the system. As far as they could see, everything was fine when we asked them.

As we automate the world around us, it is important to know how the systems we build fail. We are going to need to fix them, after all. It is even more important to know how our machines lie to us. The last thing we need is an army of lying robots wandering around.

Luckily, there are a number of techniques for testing and introducing change that can be used to help ensure nothing bad happens.

Keeping the Machines Honest

Testing needs to be about observation, not interrogation. In each case, the system converged to the policy, regardless of whether dry-run got confused or not. If we can set up test machines that reproduce a system’s state, we can real-run the policy and observe the behavior. Integration tests can then be written to ensure that the policy achieves what it is supposed to.
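A sketch of what observing looks like, using a toy Python model of the unmount scenario from the Puppet example. The state layout, the file names under /mnt/nfssrv, and every function name are assumptions for illustration; a real integration test would run the actual policy on a disposable machine and inspect that machine afterwards.

```python
MOUNT_POINT = "/mnt/nfssrv"
# Hypothetical file names; the ones in the real policy may differ.
FILES = ["/mnt/nfssrv/file-1", "/mnt/nfssrv/file-2"]


def reproduce_state():
    """Rebuild the known starting point: the files exist only on the NFS mount."""
    return {"local_files": set(), "mounts": {MOUNT_POINT: set(FILES)}}


def visible_files(state):
    """Files a test would see: local files plus whatever mounts expose."""
    files = set(state["local_files"])
    for contents in state["mounts"].values():
        files |= contents
    return files


def apply_policy(state):
    """Real-run: unmount, then ensure each file exists. Returns the changes made."""
    changed = []
    if MOUNT_POINT in state["mounts"]:
        del state["mounts"][MOUNT_POINT]
        changed.append(f"mount[{MOUNT_POINT}] absent")
    for path in FILES:
        if path not in visible_files(state):
            state["local_files"].add(path)
            changed.append(f"file[{path}] present")
    return changed


def test_policy_converges():
    """Observe the end state after a real run instead of trusting a prediction."""
    state = reproduce_state()
    first_run = apply_policy(state)
    assert MOUNT_POINT not in state["mounts"]
    assert all(path in visible_files(state) for path in FILES)
    assert apply_policy(state) == []  # a second run has nothing left to fix
    return first_run
```

The assertions describe the machine after convergence, so they hold no matter what a dry-run would have predicted along the way.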

Ideally, machines are modeled with policy from the ground up, starting with Just Enough Operating System to allow them to run Chef. This ensures all the details of a system have been captured and are reproducible.

Other ways of reproducing state work, but come with the burden of having to drag that knowledge around with you. Snapshots, kickstart or bootstrap scripts, and even manual configuration will all work as long as you can promise they’re accurate.

There are some situations where reproducing a test system is impossible, or modeling it from the ground up is not an option. In this case, a slow, careful, incremental application of policy, aided by dry-run mode and human intuition, is the safest way to start. Chef’s why-run mode can help aid intuition by publishing assumptions about what’s going on. “I would start the service, assuming the software had been previously installed” helps quite a bit during development.

Finally, increasing the resolution of our policies will help the most in the long term. The more robots the better. Ensuring the contents of your configuration files is good. Making sure that they are the only ones present in a conf.d directory is better. As a community, we need to produce as much high quality, trusted, tested, and reusable policy as possible.

Good luck, and be careful out there.

-s

Two tasks that systems administrators concern themselves with doing are dependency analysis and runtime configuration.

The natural progression away from manual configuration was custom scripting. Scripting reduced management complexity by automating things using languages like Bash and Perl. Tutorials and documentation instructions like “add the following line to your /etc/sshd_config” were turned into automated scripts that grepped, sed’ed, appended, and clobbered. These scripts were typically very brittle and would only produce the desired outcome after their first run.

File distribution was the next logical tactic. In this scheme, master copies of important configuration files are kept in a centralized location and distributed to machines. Distribution is handled in various ways. RDIST, NFS mounts, scp-on-a-for-loop, and rsync pulls are all popular methods.

In this scheme, autonomous agents run on hosts under management. The word autonomous is important, because it stresses that the machines manage themselves by interpreting policy remotely set by administrators. The policy could state any number of things about installed software and configuration files.

The logic that generates configuration files has to be executed somewhere. This is often done on the machine responsible for hosting the file distribution. A better place is directly on the nodes that need the configurations. This eliminates the need for distribution entirely.